- resolv.conf vs. Claus - 1:0 (11 Oct 2012)

- Downloading streams on the command-line (11 Oct 2012)

- Memo from git-svn: Could not unmemoize! (25 Oct 2011)

- Heisenberg uncertainties in Thunderbird: Both online and offline at the same time (02 Sep 2011)

- NetBeans vs. Cygwin vs. Subversion (24 Apr 2010)

- Thou Shalt Honor RFC-822, Or Not Read Email At All (08 Nov 2009)

- TWiki, KinoSearch and Office 2007 documents (20 Jul 2009)

- Java-Forum Stuttgart (06 Jul 2009)

- Speeding through the crisis (22 Apr 2009)

- mod_ntlm versus long user names (06 Mar 2009)

- Honey, where did you put the wireless LAN cable? (13 Jan 2007)

- Getting organized (21 Jan 2006)

resolv.conf vs. Claus - 1:0 (11 Oct 2012)

/etc/resolv.conf. On my system, this file sometimes disappears, and sometimes it loses its previous entries.

So far, I have learned that Ubuntu 12.04 indeed introduced a new approach of handling DNS resolution and in particular

the resolv.conf file. If my understanding is correct, whenever the system finds a DHCP server, it is supposed to

re-create the /etc/resolv.conf file using DNS information it receives via DHCP.

The discussion at http://askubuntu.com/questions/130452/how-do-i-add-a-dns-server-via-resolv-conf hints at similar

problems. But I guess I do not know enough about Ubuntu's networking internals to really understand what is going on,

unfortunately.

The following voodoo script sometimes helps me to resurrect /etc/resolv.conf with sufficient DNS information

in it. But it drives me mad that I don't have the slightest clue what I am doing there. If you read this and feel

an urge to slap your forehead, feel free to consider me a raving idiot, but do drop me a line to help me educate myself

on this issue. Thanks.

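#! /bin/bash

# If /etc/resolv.conf is a regular file rather than a symlink, move it
# to /run/resolvconf and put a symlink to the moved file in its place.
pushd /etc
if [[ -r resolv.conf ]]; then
    if [[ ! -L resolv.conf ]]; then
        mv resolv.conf /run/resolvconf
        ln -s /run/resolvconf/resolv.conf
    fi
fi
popd

# Allow resolvconf to update resolv.conf again.
resolvconf --enable-updates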
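Before running the script, it can help to see which of the states it distinguishes actually applies. The following is a minimal Python sketch and not part of the original script; the function name resolv_conf_state is made up for illustration, and it only inspects the file without changing anything.

```python
import os


def resolv_conf_state(path="/etc/resolv.conf"):
    """Classify the file along the same lines as the tests in the shell script."""
    if os.path.islink(path):
        # Corresponds to `[[ -L resolv.conf ]]`; also true for a dangling link.
        return "symlink -> " + os.readlink(path)
    if os.path.exists(path):
        # A plain file, which the script would move to /run/resolvconf.
        return "regular file"
    return "missing"


if __name__ == "__main__":
    print(resolv_conf_state())
```

If this reports a symlink pointing into /run/resolvconf, the script would leave the file alone and only run resolvconf --enable-updates.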

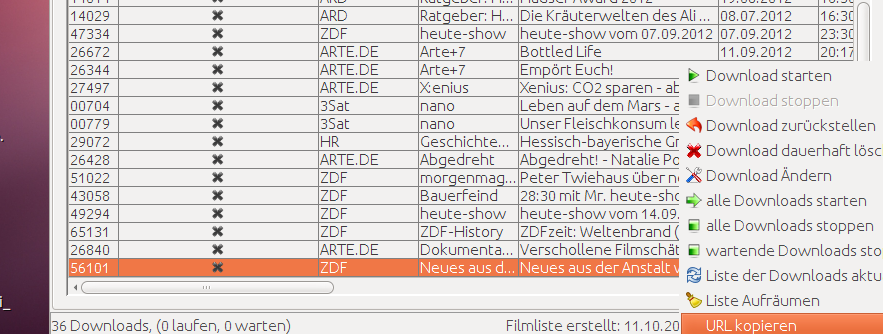

Downloading streams on the command-line (11 Oct 2012)

On Ubuntu, rtmpdump then fills the gap for me, for example:

rtmpdump -r 'rtmpt://foo/bla/fasel/foo.mp4' -o foo.mp4

Memo from git-svn: Could not unmemoize! (25 Oct 2011)

Byte order is not compatible at ../../lib/Storable.pm (autosplit into ../../lib/auto/Storable/_retrieve.al) line 380, at /usr/share/perl/5.12/Memoize/Storable.pm line 21
Could not unmemoize function `lookup_svn_merge', because it was not memoized to begin with at /usr/lib/git-core/git-svn line 3213
END failed--call queue aborted at /usr/lib/git-core/git-svn line 40.

Apparently, git-svn maintains cached meta information in .git/svn/.caches, which the Perl Storable.pm library choked on. I guess what happened is that Ubuntu 11.10 came with a more recent version of Perl and the Perl libraries, and the new version of Storable.pm did not know how to handle data stored with older versions. Again, just a guess.

Discussions at http://lists.debian.org/debian-perl/2011/05/msg00023.html, http://lists.debian.org/debian-perl/2011/05/msg00026.html and http://bugs.debian.org/cgi-bin/bugreport.cgi?bug=618875 suggest this may in fact be a bug in the git-svn scripts, but I did not venture to analyse the problem any further.

Anyway, the following took care of the problem for me:

rm -rf .git/svn/.caches

Heisenberg uncertainties in Thunderbird: Both online and offline at the same time (02 Sep 2011)

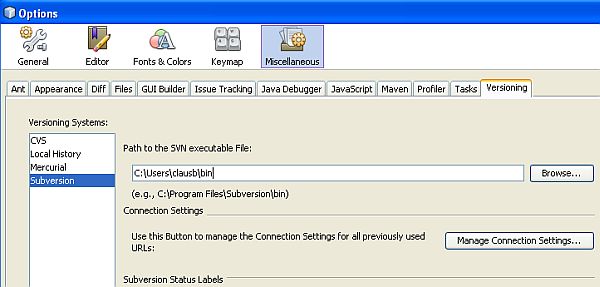

NetBeans vs. Cygwin vs. Subversion (24 Apr 2010)

svn version compiled for Cygwin when it finds it in your PATH -

and that's where trouble starts. Some related bug reports:

108577, 108536, 124537, 124343, 108069, 144021.

Fortunately, it is simple to work around the problem, as NetBeans can either download an integrated SVN client, or you can configure it to use plain vanilla Windows versions of svn.

That, of course, was way too simple for me. I wanted to know what really kept my

preferred IDE from having polite conversations with Cygwin executables.

As a first step, I ran tests with the "IDE Log" window open (accessible from NetBeans'

"View" menu). I also cranked up NetBeans logging levels; example:

netbeans.exe -J-Dorg.netbeans.modules.subversion.level=1

From the logging output, it looked like the Cygwin version of the

svn client fails because

NetBeans passes file paths in Windows notation, i.e. the paths contain backslashes.

I didn't want to mess with NetBeans code, so just for laughs, I built a trivial interceptor

tool which converts paths into UNIX

notation and then calls the original Cygwin svn.exe. This took me a little further, but it wasn't

sufficient. For example, NetBeans often runs the svn client like this:

svn info --targets sometempfile --non-interactive....

And the temporary file

sometempfile contains additional file specifications (in Windows notation).

I hacked those temp files in my interceptor as well - and now I'm getting results from

NetBeans! Whoopee!
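To make the two conversion steps concrete, here is a minimal Python sketch of the idea. It is not the interceptor itself: win_to_cygwin is a rough, hypothetical stand-in for what Cygwin's own path conversion does, it assumes the default /cygdrive prefix, and it knows nothing about mount tables or UNC paths.

```python
def win_to_cygwin(path, prefix="/cygdrive"):
    """Turn a path in Windows notation into UNIX notation (simplified)."""
    if "\\" not in path:
        # No backslash, so treat it as an option or an already usable path.
        return path
    if len(path) >= 3 and path[0].isalpha() and path[1:3] == ":\\":
        # Drive-letter path, e.g. C:\work\file -> /cygdrive/c/work/file
        drive = path[0].lower()
        return prefix + "/" + drive + "/" + path[3:].replace("\\", "/")
    # Relative path: only flip the separators.
    return path.replace("\\", "/")


def patch_targets(lines):
    """Convert every line of a --targets file, keeping line endings intact."""
    return [win_to_cygwin(line) for line in lines]


if __name__ == "__main__":
    print(win_to_cygwin("C:\\work\\src\\Main.java"))
    print(patch_targets(["D:\\proj\\a.txt\n", "src\\b.txt\n"]))
```

Arguments without backslashes, such as --non-interactive, pass through unchanged, so the same function can be applied blindly to every command-line argument.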

Yeah, I know, this is totally a waste of time, since using an alternative Subversion client implementation

on Windows is a) trivial to accomplish and b) so much safer than this nightmarish hack of mine, but hey,

at least I learned a couple of things about NetBeans and its SVN integration while geeking out.

A safer fix would be for NetBeans to detect if the version of svn.exe in use is a Cygwin version,

and if so, produce UNIX paths. That fix would probably affect

SvnCommand.java, maybe also some other files.

Without further ado, here's the code of the interceptor.

Obligatory warnings: Makes grown

men cry. Riddled with bugs. Platform-dependent in more ways than I probably realize. And largely untested.

#include <malloc.h>
#include <process.h>
#include <stdio.h>
#include <string.h>
#include <syslimits.h>
#include <sys/cygwin.h>
#include <unistd.h>

// Experimental svn interceptor, to help
// debug NetBeans vs. Cygwin svn problems. See
// http://www.clausbrod.de/Blog/DefinePrivatePublic20100424NetBeansVersusCygwin
// for details.
//
// Claus Brod, April 2010

// Returns a newly allocated UNIX-style copy of 'from', or NULL on failure.
char *convpath(const char *from)
{
  if (0 == strchr(from, '\\')) {
    return strdup(from);
  }

  ssize_t len = cygwin_conv_path(CCP_WIN_A_TO_POSIX, from, NULL, 0);
  if (len < 0) return NULL;
  char *to = (char *) malloc(len);
  if (0 == cygwin_conv_path(CCP_WIN_A_TO_POSIX, from, to, len)) {
    return to;
  }
  free(to);
  return NULL;
}

// Writes a converted copy of the --targets file and returns its name.
char *patchfile(const char *from)
{
  FILE *ffrom = fopen(from, "r");
  if (!ffrom) return NULL;

#define SUFFIX "__hungo"
  char *to = (char *) malloc(PATH_MAX + sizeof (SUFFIX));
  strncpy(to, from, PATH_MAX);
  to[PATH_MAX] = '\0';  // strncpy does not terminate overlong names
  strcat(to, SUFFIX);

  FILE *fto = fopen(to, "w");
  if (!fto) {
    fclose(ffrom);
    free(to);
    return NULL;
  }

  char buf[2048];
  while (NULL != fgets(buf, sizeof (buf), ffrom)) {
    char *converted = convpath(buf);
    if (converted) {
      fputs(converted, fto);
      free(converted);
    }
  }
  fclose(fto);
  fclose(ffrom);
  return to;
}

int main(int argc, char *argv[])
{
  char **args = (char **) calloc(argc + 1, sizeof (char*));

  // original svn client is in /bin
  args[0] = "/bin/svn.exe";
  for (int i = 1; i < argc; i++) {
    args[i] = convpath(argv[i]);
    if (!args[i]) args[i] = argv[i];  // conversion failed, pass through as-is
  }

  // look for --targets (and make sure a file name follows it)
  for (int i = 0; i + 1 < argc; i++) {
    if (0 == strcmp(args[i], "--targets")) {
      char *to = patchfile(args[i + 1]);
      if (to) args[i + 1] = to;
    }
  }

  int ret = spawnv(_P_WAIT, args[0], args);

  // Remove temporary --targets
  for (int i = 0; i + 1 < argc; i++) {
    if (0 == strcmp(args[i], "--targets")) {
      unlink(args[i + 1]);
    }
  }
  return ret;
}

Usage instructions:

- Compile into svn.exe, using the Cygwin version of gcc

- Point NetBeans to the interceptor (Tools/Options/Miscellaneous/Versioning/Subversion)

- The interceptor expects the original Cygwin svn in /bin.

This is a debugging tool. Using this in a production environment is a recipe for failure and data loss.

(Did I really have to mention this?)

Thou Shalt Honor RFC-822, Or Not Read Email At All (08 Nov 2009)

- Create a temp folder on the IMAP server

- Move all messages from the Inbox to the temp folder

- With all inbox messages out of the way, hit Thunderbird's "Get Mail" button again.

telnet int-mail.ptc.com 143
. login cbrod JOSHUA
. select INBOX
. fetch 1:100 flags

Surprisingly, IMAP still reported 17 messages, which I then deleted manually as follows:

. store 1:17 flags \Deleted
. expunge
. close

And now, finally, the error message in Thunderbird was gone. Phew. In hindsight, I should have kept those invitation messages around to find out more about their RFC-822 compliance problem. But I guess there is no shortage of meeting invitations in an Outlook-centric company, and so there will be more specimens available for thorough scrutiny.
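The same cleanup could presumably be scripted instead of typed into telnet. The following Python sketch uses the standard imaplib module; purge_inbox and main are hypothetical names, host and credentials are placeholders supplied by the caller, and unlike the session above it adds \Deleted via +FLAGS rather than replacing the whole flag set.

```python
import imaplib


def purge_inbox(conn):
    """Flag every message still in INBOX as deleted and expunge it.

    'conn' is an already logged-in imaplib.IMAP4 (or compatible) object.
    Returns the number of messages the server reported for INBOX.
    """
    typ, data = conn.select("INBOX")
    if typ != "OK":
        raise RuntimeError("cannot select INBOX")
    count = int(data[0])  # SELECT returns the message count
    if count > 0:
        conn.store("1:%d" % count, "+FLAGS", "\\Deleted")
        conn.expunge()
    conn.close()
    return count


def main(host, user, password):
    """Plain-text IMAP on port 143, like the telnet session."""
    conn = imaplib.IMAP4(host, 143)
    conn.login(user, password)
    try:
        return purge_inbox(conn)
    finally:
        conn.logout()
```

Since this deletes mail for good, it only makes sense after the messages worth keeping have been moved to another folder, as in the steps above.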

TWiki, KinoSearch and Office 2007 documents (20 Jul 2009)

.docx, .pptx and .xlsx,

i.e. the so-called "Office OpenXML" formats. That's a pity, of course, since

these days, most new Office documents tend to be provided in those formats.

The KinoSearch add-on doesn't try to parse (non-trivial) documents

on its own, but rather relies on external helper programs which extract

indexable text from documents. So the task at hand is to write such

a text extractor.
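To give an idea of what such a helper has to do, here is a minimal sketch in Python using only the standard library. It relies on the fact that a .docx file is a ZIP archive whose main text lives in word/document.xml; the function name docx_text is made up for illustration, and the sketch ignores headers, footnotes, .pptx and .xlsx, so it is no substitute for a real extractor.

```python
import sys
import xml.etree.ElementTree as ET
import zipfile

# XML namespace of WordprocessingML elements such as <w:p> and <w:t>
W_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"


def docx_text(path):
    """Return the body text of a .docx file, one line per paragraph."""
    with zipfile.ZipFile(path) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    paragraphs = []
    for para in root.iter("{%s}p" % W_NS):
        # A paragraph's text is spread over one or more <w:t> runs.
        runs = [t.text or "" for t in para.iter("{%s}t" % W_NS)]
        paragraphs.append("".join(runs))
    return "\n".join(paragraphs)


if __name__ == "__main__":
    for name in sys.argv[1:]:
        print(docx_text(name))
```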

Fortunately, the Apache POI project just released

a version of their libraries which now also support OpenXML formats, and

with those libraries, it's a piece of cake to build a simple text extractor!

Here's the trivial Java driver code:

package de.clausbrod.openxmlextractor;

import java.io.File;

import org.apache.poi.POITextExtractor;
import org.apache.poi.extractor.ExtractorFactory;

public class Main {
    public static String extractOneFile(File f) throws Exception {
        POITextExtractor extractor = ExtractorFactory.createExtractor(f);
        String extracted = extractor.getText();
        return extracted;
    }

    public static void main(String[] args) throws Exception {
        if (args.length <= 0) {
            System.err.println("ERROR: No filename specified.");
            return;
        }

        for (String filename : args) {
            File f = new File(filename);
            System.out.println(extractOneFile(f));
        }
    }
}

Full Java 1.6 binaries are attached;

Apache POI license details apply.

Copy the ZIP archive to your TWiki server and unzip it in a directory of your choice.

With this tool in place, all we need to do is provide a stringifier plugin to

the add-on. This is done by adding a file called OpenXML.pm to the

lib/TWiki/Contrib/SearchEngineKinoSearchAddOn/StringifierPlugins

directory in the TWiki server installation:

# For licensing info read LICENSE file in the TWiki root.
# This program is free software; you can redistribute it and/or
# modify it under the terms of the GNU General Public License
# as published by the Free Software Foundation; either version 2
# of the License, or (at your option) any later version.
#
# This program is distributed in the hope that it will be useful,
# but WITHOUT ANY WARRANTY; without even the implied warranty of
# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
# GNU General Public License for more details, published at
# http://www.gnu.org/copyleft/gpl.html

package TWiki::Contrib::SearchEngineKinoSearchAddOn::StringifyPlugins::OpenXML;
use base 'TWiki::Contrib::SearchEngineKinoSearchAddOn::StringifyBase';
use File::Temp qw/tmpnam/;

__PACKAGE__->register_handler(
  "application/vnd.openxmlformats-officedocument.spreadsheetml.sheet", ".xlsx");
__PACKAGE__->register_handler(
  "application/vnd.openxmlformats-officedocument.wordprocessingml.document", ".docx");
__PACKAGE__->register_handler(
  "application/vnd.openxmlformats-officedocument.presentationml.presentation", ".pptx");

sub stringForFile {
  my ($self, $file) = @_;
  my $tmp_file = tmpnam();

  my $text;
  my $cmd = "java -jar /www/twiki/local/bin/openxmlextractor/openxmlextractor.jar '$file' > $tmp_file";
  if (0 == system($cmd)) {
    $text = TWiki::Contrib::SearchEngineKinoSearchAddOn::Stringifier->stringFor($tmp_file);
  }

  unlink($tmp_file);
  return $text;  # undef signals failure to caller
}

1;

This script assumes that the

openxmlextractor.jar helper is located at

/www/twiki/local/bin/openxmlextractor; you'll have to tweak this path to

reflect your local settings.

I haven't figured out yet how to correctly deal with encodings in the stringifier

code, so non-ASCII characters might not work as expected.

To verify local installation, change into /www/twiki/kinosearch/bin (this is

where my TWiki installation is, YMMV) and run the extractor on a test file:

./ks_test stringify foobla.docx

And in a final step, enable index generation for Office documents by adding

.docx, .pptx and .xlsx to the Main.TWikiPreferences topic:

* KinoSearch settings

* Set KINOSEARCHINDEXEXTENSIONS = .pdf, .xml, .html, .doc, .xls, .ppt, .docx, .pptx, .xlsx

Java-Forum Stuttgart (06 Jul 2009)

But the remaining few of us still had a spirited

discussion, covering topics such as dynamic versus static typing, various Clojure language

elements, Clojure's Lisp heritage, programming for concurrency, web frameworks, Ruby on Rails,

and OO databases.

To those who stopped by, thanks a lot for this discussion and for your interest.

And to the developer from Bremen whose name I forgot (sorry): As we suspected, there is

indeed an alternative syntax for creating Java objects in Clojure.

(.show (new javax.swing.JFrame)) ;; probably more readable for Java programmers
(.show (javax.swing.JFrame.)) ;; Clojure shorthand

Speeding through the crisis (22 Apr 2009)

So I'm sticking to the old hardware, and it works great, except for one

thing: It cannot set bookmarks. Sure, it remembers which file I was playing

most recently, but it doesn't know where I was within that file. Without

bookmarks, resuming a 40-minute podcast which

I started the other day is an awkward, painstakingly slow and daunting task.

But then, those years at university studying computer science needed to

finally amortize themselves anyway, and so I set out to look for a software solution!

The idea was to preprocess podcasts as follows:

- Split podcasts into five-minute chunks. This way, I can easily resume from where I left off without a lot of hassle.

- While I'm at it, speed up the podcast by 15%. Most podcasts have more than enough verbal fluff and uhms and pauses in them, so listening to them at their original speed is, in fact, a waste of time. Of course, I don't want all my podcasts to sound like Mickey Mouse cartoons, so I need to preserve the original pitch.

- Most of the time, I listen to technical podcasts over el-cheapo headphones in noisy environments like commuter trains, so I don't need no steenkin' 320kbps bitrates, thank you very much.

- And the whole thing needs to run from the command line so that I can process podcasts in batches.

#! /bin/bash
#
# Hacked by Claus Brod,
# http://www.clausbrod.de/Blog/DefinePrivatePublic20090422SpeedingThroughTheCrisis
#
# prepare podcast for mp3 player:
# - speed up by 15%
# - split into small chunks of 5 minutes each
# - recode in low bitrate
#
# requires:
# - lame
# - soundstretch
# - mp3splt

if [ $# -ne 1 ]
then
  echo Usage: $0 mp3file >&2
  exit 2
fi

bn=`basename "$1"`
bn="${bn%.*}"

lame --priority 0 -S --decode "$1" - | \
  soundstretch stdin stdout -tempo=15 | \
  lame --priority 0 -S --vbr-new -V 9 - temp.mp3

mp3splt -q -f -t 05.00 -o "${bn}_@n" temp.mp3
rm temp.mp3
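As a rough sanity check of what to expect from the script, the arithmetic can be sketched in a few lines of Python. This assumes that a tempo increase of 15% shortens the playing time by a factor of 1.15 and that the last chunk simply holds whatever remains; chunk_plan is a made-up helper name, and the exact number of files mp3splt writes may differ slightly.

```python
import math


def chunk_plan(minutes, tempo_percent=15, chunk_minutes=5):
    """Estimate playing time in seconds and chunk count after speeding up."""
    sped_up = minutes * 60 / (1 + tempo_percent / 100)
    chunks = math.ceil(sped_up / (chunk_minutes * 60))
    return sped_up, chunks


seconds, chunks = chunk_plan(40)
print("%.0f s in %d chunks" % (seconds, chunks))  # 2087 s in 7 chunks
```

So the 40-minute podcast mentioned above shrinks to a little under 35 minutes and ends up in about seven files.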

The script uses lame,

soundstretch and

mp3splt for the job, so you'll have to download

and install those packages first. On Windows, lame.exe, soundstretch.exe and

mp3splt.exe also need to be accessible through PATH.

The script is, of course, absurdly lame with all its hardcoded filenames and parameters

and all, and it works for MP3 files only - but it does the job for me,

and hopefully it's useful to someone out there as well. Enjoy!

mod_ntlm versus long user names (06 Mar 2009)

[Mon Mar 02 11:37:37 2009] [error] [client 42.42.42.42] 144404120 17144 /twiki/bin/viewauth/Some/Topic - ntlm_decode_msg failed: type: 3, host: "SOMEHOST", user: "", domain: "SOMEDOMAIN", error: 16

The server system runs CentOS 5 and Apache 2.2. Note how the log message claims that no user name was provided, even though the user did of course enter their name when the browser prompted for it. The other noteworthy observation in this case was that the user name was unusually long - 17 characters, not including the domain name. However, the NTLM specs I looked up didn't suggest any name length restrictions. Then I looked up the mod_ntlm code - and found the following in the file ntlmssp.inc.c:

#define MAX_HOSTLEN 32
#define MAX_DOMLEN 32
#define MAX_USERLEN 32

Hmmm... so indeed there was a hard limit for the user name length! But then, the user's name had 17 characters, i.e. much less than 32, so shouldn't this still work? The solution is that at least in our case, user names are transmitted in UTF-16 encoding, which means that every character is (at least) two bytes! The lazy kind of coder that I am, I simply doubled all hardcoded limits, recompiled, and my authentication woes were over! Well, almost: Before reinstalling mod_ntlm, I also had to tweak its Makefile slightly as follows:

*** Makefile 2009/03/02 18:02:20 1.1
--- Makefile 2009/03/04 15:55:57
***************
*** 17,23 ****
  # install the shared object file into Apache
  install: all
!     $(APXS) -i -a -n 'ntlm' mod_ntlm.so
  # cleanup
  clean:
--- 17,23 ----
  # install the shared object file into Apache
  install: all
!     $(APXS) -i -a -n 'ntlm' mod_ntlm.la
  # cleanup
  clean:

Hope this is useful to someone out there! And while we're at it, here are some links to related articles:

- http://blog.rot13.org/2005/11/mod_ntlm_and_keepalive.html

- http://twiki.org/cgi-bin/view/Plugins/TinyMCEPluginDev
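The byte-count arithmetic behind the user name limit is easy to check. The following Python sketch assumes that the limits in ntlmssp.inc.c count bytes and that the name arrives as UTF-16 (little-endian, without a byte order mark); the helper name fits and the sample name are made up for illustration.

```python
MAX_USERLEN = 32  # hardcoded limit from ntlmssp.inc.c, assumed to be in bytes


def fits(name, limit=MAX_USERLEN):
    """True if the UTF-16 encoded name is no longer than 'limit' bytes."""
    return len(name.encode("utf-16-le")) <= limit


name = "a" * 17  # stand-in for a 17-character user name
print(len(name.encode("utf-16-le")))  # 34
print(fits(name))                     # False
print(fits(name, 2 * MAX_USERLEN))    # True
```

A 17-character name needs 34 bytes and thus exceeds the original limit of 32, while it fits comfortably once the limit is doubled.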

Honey, where did you put the wireless LAN cable? (13 Jan 2007)

But I digress.

I don't mean to bash the SuSE community, not by any means, but at least

the 10.1 distribution apparently was targeted at more recent and more powerful

hardware than I had at my disposal. I hear that 10.2 has a new package manager

which is probably snappier than what I used, so maybe I would have had more

luck with that version.

Anyway, I was frustrated enough to consider other distros now. These days,

of course, you can't even shop at your local grocery store without overhearing the

shop assistants discussing the latest version of Ubuntu. Who am I to resist

such powerful word-of-mouth marketing?

And I'm glad I didn't resist. Ubuntu 6.10 (Edgy Eft) installed quickly

and with only a few mouse clicks and user inputs. Interactive performance

after installation was a lot better than with openSUSE, and Ubuntu's

"Synaptic" package manager was fast and simple enough to use so that

even I grokked it immediately.

The only part of the installation that was still giving me fits was WLAN

access. The Omnibook 6000 itself doesn't have any WLAN hardware, but I still

had two WLAN adapters, a Vigor 510

and a Vigor 520,

both from Draytek. The Vigor 510 is a USB device, while the Vigor 520

uses the PCMCIA slot.

I spent an awful lot of time trying to install the Vigor 510. Drove me nuts.

I know a lot more about Linux networking now than I ever cared for. Here are

some of the documents I used for this noble endeavour:

- WLAN-NG

- "Wlan-Vigor 510 unter Ubuntu" at linuxforen.de

- WLAN-NG unter Ubuntu installieren (plastic-spoon.de)

- WifiDocs/Driver/prism2_usb at help.ubuntu.com

- http://wiki.ubuntuusers.de/WLAN

- http://wiki.ubuntuusers.de/WLAN/NdisWrapper

- http://wiki.ubuntuusers.de/WLAN/Installation

- http://wiki.ubuntuusers.de/WLAN/wireless-tools

- http://wiki.ubuntuusers.de/interfaces

- Wireless HOWTO

- WifiDocs/Driver/Ndiswrapper

- Ubuntu und WLAN mit WPA und ndiswrapper

- http://linux-wless.passys.nl/query_part.php?brandname=DrayTek

/etc/network/interfaces right:

auto wlan1
iface wlan1 inet dhcp
    wireless yes
    wireless-mode managed
    wireless-essid FRITZ!Box Trallala
    wireless-key open s:1234567890123

So except for the WLAN adapters, Ubuntu installation was a breeze, mostly because it's a nice distribution, but also because the Ubuntu community has in fact produced a lot of helpful documentation and guidelines which were written for the average geek, not for the Linux rockstars. I found the whole experience encouraging enough to install Ubuntu on an old system at work so that we can use it as a fileserver - but that's another story...

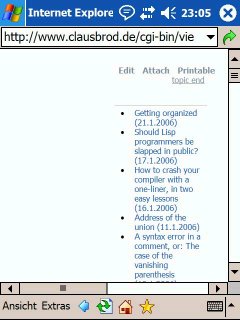

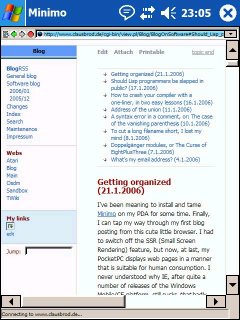

Getting organized (21 Jan 2006)

I've been meaning to install and tame Minimo on my PDA for some time. Finally, I can tap my way through my first blog posting from this cute little browser. I had to switch off the SSR (Small Screen Rendering) feature, but now, at last, my PocketPC displays web pages in a manner that is suitable for human consumption. I never understood why IE, after quite a number of releases of the Windows Mobile/CE platform, still sucks that badly as a browser. Typing with the stylus is a pain in the youknowwhere, so I probably won't be blogging from my PDA that often.

But still, I love the Minimo browser, even though it is in its

early infancy. It seems to do a much better job at displaying most web sites than IE;

it groks the CSS-based layout of my own web site; it makes use of the VGA screen on

my PDA; and I can now even use TWiki's direct editing facilities from my organizer.

This project really makes me wonder what it would take to start developing for the PocketPC platform...
